The realm of artificial intelligence has long been captivated by the promise of reinforcement learning, where agents learn optimal behaviors through trial and error in simulated environments. Yet, the grand challenge has always been bridging the chasm between these meticulously crafted digital worlds and the messy, unpredictable reality they aim to represent. This journey, known as Sim-to-Real transfer, is not merely a technical hurdle; it represents the fundamental frontier of deploying learned intelligence into the physical world.

At its core, the Sim-to-Real problem is a story of discrepancy. A simulation, no matter how sophisticated, is an abstraction. It operates on a set of assumptions, mathematical models, and simplified physics. An agent trained to perfection in such a sandbox becomes a master of that specific virtual universe. However, the real world is fraught with complexities that are notoriously difficult to model with perfect fidelity. The subtle friction of a joint, the slight delay in a motor's response, the unpredictable lighting conditions for a camera, or the chaotic dynamics of air currents for a drone—these are the "reality gaps" that can cause a brilliantly successful simulation policy to fail catastrophically upon deployment. The agent's knowledge, so precise in simulation, becomes brittle and unreliable when faced with sensory noise and physical phenomena it never encountered during its training.

Researchers have approached this challenge not by trying to build the one perfect simulation, which is a likely impossible feat, but by making the learning process itself more robust and adaptable. One of the most powerful strategies in this arsenal is Domain Randomization. Instead of training an agent in a single, static simulation, this technique trains it across a vast array of randomized simulations. The parameters of the world, both physical and visual (masses, friction coefficients, textures, lighting angles, sensor noise), are deliberately varied within plausible bounds. The agent is forced to learn a policy that is not overfitted to one specific set of conditions but can generalize across a wide spectrum of possibilities. It's the educational equivalent of preparing for an exam by studying under different lighting, with different pens, and while slightly tired or hungry; you learn the core material so well that the environmental distractions become irrelevant.
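To make the idea concrete, here is a minimal sketch of domain randomization, assuming a toy 1D point mass rather than a real robot simulator. Every episode samples fresh values for mass, friction, and sensor noise from hypothetical ranges, and the "training" step is a simple grid search over a controller gain (standing in for an RL optimizer) that maximizes the average return across the randomized worlds. Visual parameters such as textures and lighting are not modeled here. `SimParams`, `RANGES`, `sample_params`, `rollout`, and `train_gain` are illustrative names, not part of any library.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    mass: float          # kg
    friction: float      # viscous friction coefficient
    sensor_noise: float  # std-dev of position measurement noise


# Hypothetical "plausible bounds" for each randomized parameter.
RANGES = {
    "mass": (0.5, 2.0),
    "friction": (0.05, 0.5),
    "sensor_noise": (0.0, 0.02),
}


def sample_params(rng: random.Random) -> SimParams:
    """Draw one randomized world: each parameter uniform within its range."""
    return SimParams(**{k: rng.uniform(lo, hi) for k, (lo, hi) in RANGES.items()})


def rollout(gain: float, p: SimParams, rng: random.Random,
            steps: int = 200, dt: float = 0.02) -> float:
    """Toy episode: push a 1D point mass from x=0 toward x=1.

    Returns the negative integrated squared error (higher is better).
    Velocity is assumed to be measured without noise for simplicity.
    """
    x, v, cost = 0.0, 0.0, 0.0
    for _ in range(steps):
        x_obs = x + rng.gauss(0.0, p.sensor_noise)      # noisy position sensor
        force = gain * (1.0 - x_obs) - 2.0 * v          # PD-style controller, fixed damping
        a = (force - p.friction * v) / p.mass           # dynamics depend on randomized params
        v += a * dt                                     # semi-implicit Euler integration
        x += v * dt
        cost += (1.0 - x) ** 2 * dt
    return -cost


def train_gain(candidates, episodes_per_candidate: int, rng: random.Random):
    """Pick the gain with the best average return over freshly randomized worlds."""
    best_gain, best_score = None, float("-inf")
    for g in candidates:
        score = sum(rollout(g, sample_params(rng), rng)
                    for _ in range(episodes_per_candidate)) / episodes_per_candidate
        if score > best_score:
            best_gain, best_score = g, score
    return best_gain


rng = random.Random(0)
gain = train_gain([1.0, 5.0, 10.0, 20.0, 40.0], episodes_per_candidate=20, rng=rng)
print("selected gain:", gain)
```

Because each candidate is scored on many sampled worlds instead of one nominal one, the selected controller is less likely to exploit a quirk of a single parameter setting; a real pipeline would replace the grid search with a policy-gradient or similar RL algorithm.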

Another sophisticated technique adds a layer of adaptation through System Identification. Here, the strategy is twofold. First, an agent is trained in a nominal simulation. Second, upon deployment in the real world, the system runs a short series of tests or observes its initial interactions to estimate the real-world parameters that differ from those in the simulation. These estimates are then fed back to fine-tune the simulation model, tightening the feedback loop between simulation and reality. In more advanced implementations, the policy itself is trained to be aware of these latent parameters and can adapt its behavior on the fly based on its ongoing experience, effectively learning the "personality" of the real-world system it is controlling.
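A minimal sketch of the identification step, assuming a hypothetical 1D system with mass m and viscous friction b, so that the applied force is F = m*a + b*v. Logged (force, velocity, acceleration) samples from probing motions are fit by ordinary least squares (solving the 2x2 normal equations directly), and the estimates then overwrite the nominal simulation parameters. The "real" data is synthesized by `collect_probe_data` as a stand-in for hardware logs; all names and numeric values are illustrative.

```python
import random


def identify_mass_friction(samples):
    """Least-squares fit of F = m*a + b*v from (force, velocity, acceleration) logs.

    Assumes a hypothetical 1D model with viscous friction; returns (m_hat, b_hat).
    """
    s_aa = sum(a * a for _, _, a in samples)
    s_vv = sum(v * v for _, v, _ in samples)
    s_av = sum(a * v for _, v, a in samples)
    s_af = sum(a * f for f, _, a in samples)
    s_vf = sum(v * f for f, v, _ in samples)
    det = s_aa * s_vv - s_av * s_av
    if abs(det) < 1e-12:
        raise ValueError("excitation too poor to separate mass from friction")
    # Cramer's rule on the normal equations [[s_aa, s_av], [s_av, s_vv]] @ [m, b] = [s_af, s_vf]
    m_hat = (s_af * s_vv - s_vf * s_av) / det
    b_hat = (s_aa * s_vf - s_av * s_af) / det
    return m_hat, b_hat


def collect_probe_data(true_mass, true_friction, n, rng):
    """Stand-in for real-robot probing: random excitation plus force-sensor noise."""
    samples = []
    for _ in range(n):
        v = rng.uniform(-1.0, 1.0)
        a = rng.uniform(-2.0, 2.0)
        f = true_mass * a + true_friction * v + rng.gauss(0.0, 0.01)
        samples.append((f, v, a))
    return samples


rng = random.Random(42)
log = collect_probe_data(true_mass=1.3, true_friction=0.22, n=200, rng=rng)
m_hat, b_hat = identify_mass_friction(log)

# Feed the estimates back into the (hypothetical) nominal simulation configuration.
sim_config = {"mass": 1.0, "friction": 0.1}
sim_config.update(mass=m_hat, friction=b_hat)
print(f"estimated mass={m_hat:.3f}, friction={b_hat:.3f}")
```

The `det` check matters in practice: if the probing motions never vary velocity and acceleration independently, mass and friction cannot be separated, which is why identification routines typically rely on deliberately varied excitation.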

The quest for robustness has also been significantly advanced by the rise of deep learning models as powerful function approximators within RL. Their ability to distill high-dimensional sensory input (like pixels from a camera) into meaningful features allows agents to focus on task-relevant information while filtering out irrelevant noise. This capability is crucial for crossing the reality gap, as it helps the agent ignore visual artifacts or lighting changes that were present in simulation but differ in reality, focusing instead on the geometric and physical relationships that truly matter for the task, such as the position of an object or the angle of a limb.

Beyond algorithms, the very nature of the simulations is evolving. The adoption of powerful game engines and the move towards photo-realism are helping to narrow the visual reality gap. More importantly, the focus is shifting towards improving the accuracy of physical simulation. Advances in simulating soft-body dynamics, fluid interactions, and complex contact mechanics are creating digital worlds that behave more like their real counterparts. When an agent learns to manipulate a deformable object or walk over uneven, granular terrain in a highly accurate simulation, that knowledge is far more likely to transfer successfully.

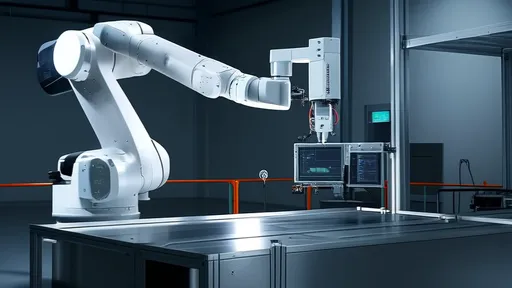

The ultimate test of these technologies is their application in the real world, and the results are increasingly impressive. In robotics, we see Sim-to-Real transfer enabling breathtaking agility. Robots are learning to walk, run, and navigate complex obstacle courses entirely in simulation before being deployed on physical hardware with minimal fine-tuning. In industrial automation, robotic arms are trained to perform delicate manipulation tasks, like assembling intricate components or handling flexible wires, without ever touching the real object during training. This not only slashes development time and cost by avoiding wear and tear on physical systems but also allows for training on dangerous tasks in complete safety.

Looking forward, the future of Sim-to-Real research is moving towards even greater autonomy and generalization. The next frontier is Meta-Learning or "learning to learn," where agents are trained in simulations not on one task, but on a distribution of tasks. The goal is to acquire a prior understanding of physics and problem-solving that is so fundamental that the agent can be dropped into a completely novel real-world environment and rapidly adapt its policy within a handful of trials. This would mark a shift from transferring a specific skill to transferring a general capacity for agile learning.
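The article does not commit to a specific meta-learning algorithm. As one illustration, here is a minimal sketch of Reptile, a published first-order meta-learning method, on a hypothetical family of 1D line-fitting tasks standing in for a distribution of tasks. The outer loop repeatedly adapts a shared initialization to a sampled task with a few gradient steps, then nudges the initialization toward the adapted weights. Afterwards, a few steps on an unseen task start from a far better point than a naive zero initialization. The function names, task family, and hyperparameters are all assumptions chosen for illustration.

```python
import random


def mse_and_grad(theta, xs, ys):
    """Mean-squared error of y ~ w*x + c and its gradient with respect to (w, c)."""
    w, c = theta
    n = len(xs)
    loss, gw, gc = 0.0, 0.0, 0.0
    for x, y in zip(xs, ys):
        err = w * x + c - y
        loss += err * err / n
        gw += 2.0 * err * x / n
        gc += 2.0 * err / n
    return loss, (gw, gc)


def adapt(theta, xs, ys, steps, lr):
    """Inner loop: a few plain gradient-descent steps on one task's data."""
    w, c = theta
    for _ in range(steps):
        _, (gw, gc) = mse_and_grad((w, c), xs, ys)
        w, c = w - lr * gw, c - lr * gc
    return (w, c)


def sample_task(rng, n_points=10):
    """Hypothetical task family: lines with slope in [1.5, 2.5], offset in [-0.5, 0.5]."""
    a, b = rng.uniform(1.5, 2.5), rng.uniform(-0.5, 0.5)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n_points)]
    return xs, [a * x + b for x in xs]


def reptile(meta_iters, inner_steps, inner_lr, meta_lr, rng):
    """Reptile outer loop: move the shared init toward each task's adapted weights."""
    w, c = 0.0, 0.0
    for _ in range(meta_iters):
        xs, ys = sample_task(rng)
        w_t, c_t = adapt((w, c), xs, ys, inner_steps, inner_lr)
        w += meta_lr * (w_t - w)
        c += meta_lr * (c_t - c)
    return (w, c)


rng = random.Random(0)
meta_init = reptile(meta_iters=2000, inner_steps=5, inner_lr=0.1, meta_lr=0.2, rng=rng)

# Few-shot adaptation to an unseen task: meta-learned start vs. naive zero start.
xs_new = [i / 10.0 for i in range(-10, 11)]
ys_new = [2.3 * x + 0.2 for x in xs_new]
loss_meta, _ = mse_and_grad(adapt(meta_init, xs_new, ys_new, 3, 0.1), xs_new, ys_new)
loss_zero, _ = mse_and_grad(adapt((0.0, 0.0), xs_new, ys_new, 3, 0.1), xs_new, ys_new)
print(f"meta-init {meta_init}, loss after 3 steps: {loss_meta:.4f} vs {loss_zero:.4f}")
```

In this toy setting the learned initialization settles near the "average" task, which is what lets three gradient steps suffice; in a Sim-to-Real setting the parameters would be a policy network and the tasks would be randomized simulated environments.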

In conclusion, the Sim-to-Real transfer challenge is a central narrative in the evolution of applied artificial intelligence. It is a multidisciplinary effort, blending insights from reinforcement learning, deep learning, robotics, and physics. The progress is a testament to a shift in philosophy: from seeking a perfect digital replica of reality to building agents that are inherently robust, adaptable, and prepared for imperfection. As the gaps continue to narrow, the boundary between simulation and reality will blur, paving the way for a new generation of intelligent systems that can learn safely and efficiently in a digital world before stepping out to transform our own.

By /Aug 26, 2025
